“AI” is not one thing.

Getting to know the different types will empower you as a buyer.

If I told you a new solution was using artificial intelligence, what would that mean to you? You may think of a chatbot like OpenAI’s ChatGPT or an image generator like DALL-E, but AI is a diverse set of technologies, each with its own capabilities. Despite its recent rise in popularity, AI is not new; applications of AI have been adding value in businesses since the 1970s. If you are a leader responsible for defining strategy and purchasing technology, answering the following questions will help you make informed decisions and avoid falling prey to sales and marketing hype.

Is it Artificial Intelligence?

First, let's define artificial intelligence. The word ‘artificial’ simply means we’re talking about the “intelligence of machines or software, as opposed to the intelligence of humans or animals”.[1] Notice that the definition doesn’t state how much intelligence the machine or software must possess. AI can be anything from a set of if-then rules written by an expert to an artificial general intelligence (AGI), where the machine or software “could learn to accomplish any intellectual task that human beings or animals can perform”.[2] Given this broad definition, you can see why so many vendors add AI to their websites! The key question for leaders becomes: what’s the nature of the AI in the solution I am evaluating?

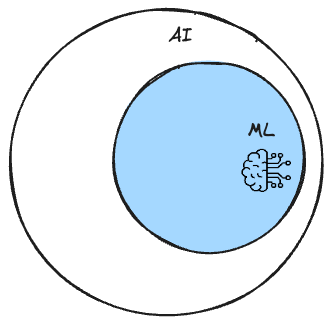
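For readers who want to see what the humblest end of that spectrum looks like, here is a minimal sketch of an “if-then” system in Python. The loan scenario, function name, and thresholds are hypothetical illustrations I made up for this example. The point is that a person wrote every rule, and nothing is learned from data.

```python
# A toy rule-based "expert system": every rule is written by a person, none are learned.
# The scenario, names, and thresholds are hypothetical illustrations.

def assess_loan(income: float, debt: float, missed_payments: int) -> str:
    """Apply hand-written if-then rules to a loan application."""
    # Treat zero income as an infinitely high debt ratio to avoid dividing by zero.
    debt_ratio = debt / income if income > 0 else float("inf")
    if missed_payments > 2:      # rule 1: poor payment history
        return "decline"
    if debt_ratio > 0.5:         # rule 2: heavy debt load needs a human review
        return "review"
    return "approve"             # default when no rule fires

print(assess_loan(income=80_000, debt=20_000, missed_payments=0))  # approve
print(assess_loan(income=50_000, debt=30_000, missed_payments=1))  # review
print(assess_loan(income=60_000, debt=10_000, missed_payments=3))  # decline
```

Technically this qualifies as AI under the broad definition above, which is exactly why “it uses AI” tells you so little on its own.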

Is it Machine Learning?

It’s safe to assume most vendors who say they’re leveraging AI today are using machine learning (ML). Unlike the if-then approach described above, ML does not require an expert to predefine the rules. Instead, it learns the rules by analyzing carefully prepared data, represented as input variables called features[3], and identifying patterns and structures within it. ML algorithms are valuable to businesses because they can identify these structures across large quantities of data, encode them as rules, and then apply those rules to new data to make predictions. Practical applications from long before the current AI hype cycle include customer segmentation, forecasting, recommendation systems, fraud detection, algorithmic trading, route optimization, credit scoring, sentiment analysis, and many more.

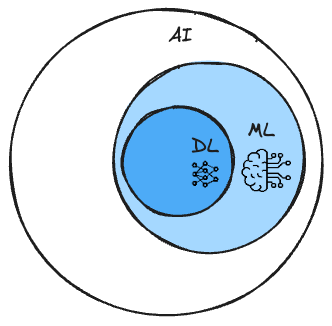
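To contrast this with the hand-written rules above, here is a minimal Python sketch of a machine *learning* a rule. It is one of the simplest ML models, a one-feature “decision stump.” The fraud scenario and the data are hypothetical. Each example is a single feature (the transaction amount as a multiple of the customer’s typical spend) paired with a label (1 = confirmed fraud). The code tries each observed value as a threshold and keeps the one that misclassifies the fewest examples.

```python
from typing import List, Tuple

def learn_threshold(examples: List[Tuple[float, int]]) -> float:
    """Learn the rule 'predict 1 if x >= t' that makes the fewest mistakes.

    examples: (feature_value, label) pairs, where label is 0 or 1.
    """
    # Candidate thresholds: every feature value seen in the data.
    candidates = sorted({x for x, _ in examples})
    best_t, best_errors = candidates[0], len(examples) + 1
    for t in candidates:
        # Count examples where the rule's prediction disagrees with the label.
        errors = sum((x >= t) != bool(y) for x, y in examples)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def predict(t: float, x: float) -> int:
    """Apply the learned rule to a new, unseen example."""
    return int(x >= t)

# Hypothetical labeled data: (amount / typical spend, 1 if fraud else 0).
transactions = [(0.8, 0), (1.1, 0), (1.3, 0), (2.0, 0),
                (2.8, 1), (3.5, 1), (4.2, 1), (5.0, 1)]
threshold = learn_threshold(transactions)
print(threshold)                   # 2.8
print(predict(threshold, 3.0))     # 1 (flagged as likely fraud)
```

Nobody told the program “flag anything at 2.8x typical spend.” It found that rule in the data. Production ML models learn far richer rules over many features, but the principle is the same.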

Is it Deep Learning?

Deep learning (DL), a key driver of the current AI revolution, is a sophisticated form of machine learning. It's built on artificial neural networks[4] (ANNs), which are inspired by neurons in the human brain but are much simpler. The “deep” simply means the network has multiple layers between its input and output layers. Each successive layer transforms the output of the previous one into increasingly abstract and complex representations. This structure allows DL to extract useful features from raw data automatically, greatly reducing the need for manual feature engineering. Breakthroughs in algorithms and hardware over the past decade have enabled DL to outperform many traditional ML techniques, particularly on highly complex, unstructured data such as images, audio, and text, and have led to widely known examples like AlphaGo[5]. Interestingly, for prediction tasks on structured data, like the tabular data in a spreadsheet, traditional ML methods often outperform DL models.

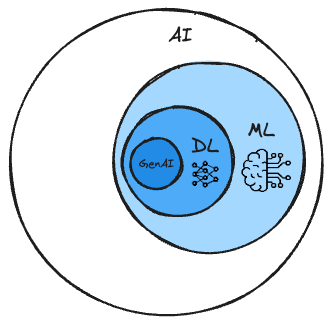
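To make the idea of layers concrete, here is a tiny Python sketch of a two-layer neural network computing XOR, which is “true when exactly one input is true.” A single layer of this kind cannot represent XOR. Adding a hidden layer lets the network first build intermediate features and then combine them.

This toy simplifies a real network in three important ways:

* **Hand-set weights.** I set the weights by hand. A real DL model learns them from data, typically with gradient descent.
* **Step activation.** It uses a simple step activation instead of the smooth functions used in practice.
* **Scale.** It has two layers, not dozens or hundreds.

```python
from typing import List

def step(z: float) -> int:
    """Step activation: the unit 'fires' (outputs 1) when its input is positive."""
    return 1 if z > 0 else 0

def layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[int]:
    """One fully connected layer: each unit takes a weighted sum of all inputs plus a bias."""
    return [step(sum(w * x for w, x in zip(unit_w, inputs)) + b)
            for unit_w, b in zip(weights, biases)]

# Hand-set (NOT learned) weights. Hidden unit 1 behaves like OR, hidden unit 2 like AND.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
# The output unit fires when OR is true but AND is false, which is XOR.
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [-0.5]

def xor_net(a: int, b: int) -> int:
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B)     # intermediate representation
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]    # final answer built from it

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, "XOR", b, "=", xor_net(a, b))
```

The hidden layer turns the raw inputs into new features (“at least one is on” and “both are on”) that make the final decision easy. In a real deep network, those intermediate features are discovered during training rather than designed by hand.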

Is it Generative AI?

Generative AI (GenAI) has existed for many years, but recent advances in deep learning, highlighted by the development of architectures like the transformer[6], have significantly increased its capabilities. These advances enable GenAI models to efficiently learn patterns and structures from their training data and create new content with similar characteristics. GenAI stands out within machine learning for its nascent abilities to seemingly model the world, reason, plan, and take action, venturing into realms once exclusive to human creativity. Beyond the impressive consumer technologies being created with GenAI, such as sophisticated virtual assistants and generated art, music, and video, the implications for businesses are enormous. By lowering the marginal cost of creativity and intelligence, GenAI can amplify human capabilities across industries, dramatically increasing the productivity of both white- and blue-collar workers.
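The core loop of “learn patterns from data, then create new, similar content” can be illustrated in a few lines of Python with a bigram Markov chain. This is a technique far older and simpler than the transformer-based models behind tools like ChatGPT. I’ve swapped it in only because it fits on a page, so please don’t read it as how modern GenAI works internally. The tiny corpus is made up.

```python
import random
from collections import defaultdict

def train_bigrams(text: str) -> dict:
    """'Training': record, for each word, every word that followed it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)

def generate(model: dict, start: str, length: int, rng: random.Random) -> list:
    """'Generation': build new text one word at a time from the learned patterns."""
    out = [start]
    for _ in range(length - 1):
        options = model.get(out[-1])
        if not options:          # dead end: this word was never followed by anything
            break
        out.append(rng.choice(options))
    return out

corpus = "the model reads the data and the model writes new text from the data"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 8, random.Random(0))))
```

The output is a word sequence that may never appear in the corpus, yet every word-to-word step was seen in training. Modern GenAI models learn vastly richer patterns across entire documents, images, or audio, which is what makes their output so much more convincing.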

Now, be curious and ask questions.

The most important advice I could give business and technology leaders evaluating a new technology is to be intellectually curious and ask questions. Obviously, the first question you need to answer is, “What business problem(s) does this AI solution solve?” For help answering this, please take a look at my previous post on building an AI Initiative Inventory. Assuming this is answered favorably, and equipped with the basic understanding of the types of AI provided above, you should now be comfortable asking, “What type of AI does your solution utilize?” When the vendor answers, listen carefully for the following:

Do they refer to specific types of AI, like machine learning, deep learning, or generative AI? This is a good indicator that something real is codified in their solution. Ask follow-up questions like, “Can you give me examples of this type of AI being successfully applied in other fields or consumer applications?” and “What are the limitations of this type of AI?”

Do they explain the application of this AI in terms of how it amplifies human capabilities and translates into higher productivity? Ask how a capable human would solve the problem their solution addresses, and in what ways their methodology is better.

Do they talk about how their models are “continually learning and improving” as they receive new data? If they do, ask how often their models are retrained, or ask them to explain how their reinforcement learning[7] approach works. In many business sales scenarios, this will be marketing or sales speak. Other than through complicated, emerging, or computationally expensive techniques, most business solutions leveraging AI only incorporate new learnings when they are retrained.

Finally, make sure you ask them whether your proprietary data will be used to train their AI models for other customers. It's crucial to understand how your data might be used beyond your own use case, especially in terms of privacy and competitive advantage. This question will help you assess the vendor's commitment to data confidentiality and whether their solution might inadvertently benefit your competitors.
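To make the “continually learning” point concrete, here is a minimal Python sketch of how most batch-trained business models behave. The `BatchForecaster` class and its methods are hypothetical names of my own, and the “model” is just an average to keep things readable. New data is stored as it arrives, but predictions don’t change until someone, or some schedule, triggers a retrain.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class BatchForecaster:
    """Hypothetical forecaster whose learned parameter only changes on retrain()."""
    history: List[float] = field(default_factory=list)
    level: float = 0.0   # the learned parameter: average of the training data

    def observe(self, value: float) -> None:
        # New data is stored, but the model does NOT learn from it yet.
        self.history.append(value)

    def retrain(self) -> None:
        # Learning happens only here, e.g. on a nightly or weekly schedule.
        if self.history:
            self.level = sum(self.history) / len(self.history)

    def predict(self) -> float:
        return self.level

model = BatchForecaster()
for v in [100, 110, 120]:
    model.observe(v)
model.retrain()
print(model.predict())   # 110.0
model.observe(200)       # new data arrives...
print(model.predict())   # ...but the prediction is unchanged: 110.0
model.retrain()
print(model.predict())   # 132.5
```

When a vendor claims its models are “always learning,” it’s fair to ask where their equivalent of `retrain()` sits and how often it runs.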

As always, the quick section below dives deeper into the technology, so keep reading, but only if you want to geek out!

Most popular applications of AI improve along with advances in the underlying ML, DL, and GenAI technologies. This makes sense once you understand how these technologies relate to and build on each other. Let's walk through three highly visible and valuable examples business leaders should be aware of.

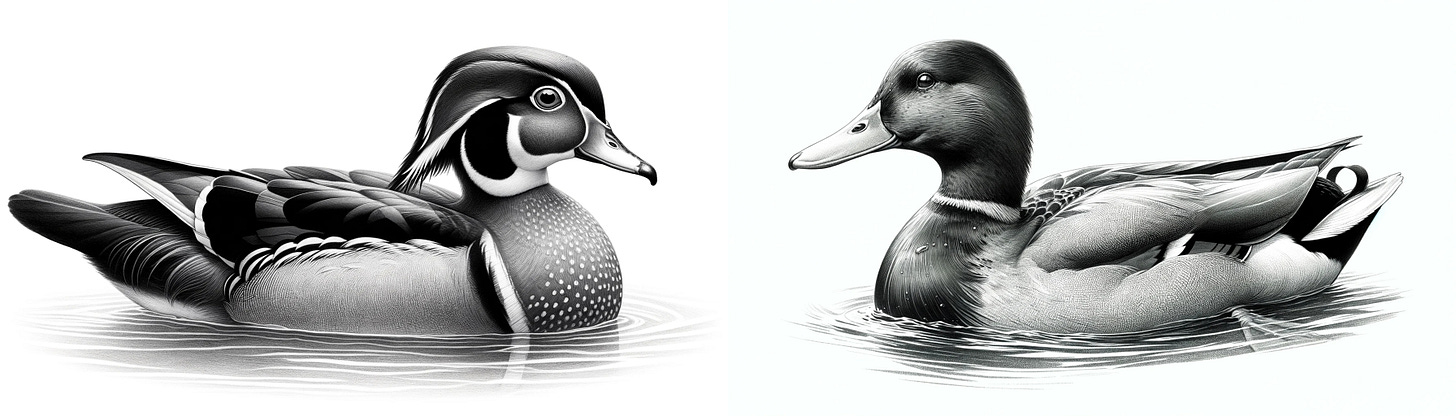

Notice how computer vision[8], itself a type of AI, overlaps with ML, DL, and GenAI in our diagram. Computer vision enables computers to interpret and process visual information from the world, similar to human vision. Using digital images from cameras and videos, along with ML, DL, and GenAI models, it can identify and classify objects with high accuracy and then react to what it "sees." Advances in ML and DL have significantly improved the accuracy and efficiency of computer vision systems, enabling them to process complex images with greater precision. GenAI breakthroughs contribute by creating new, varied training data, enhancing the robustness and adaptability of computer vision models in real-world scenarios. State-of-the-art examples include autonomous drones using advanced image recognition for efficient delivery, AI-driven quality control systems in manufacturing that detect minute defects with high accuracy, and real-time facial recognition technologies enhancing security and personalized customer experiences.

Natural Language Processing[9] (NLP) is another type of AI; it combines computational linguistics with ML, DL, and GenAI to enable computers to understand, interpret, and respond to both written and spoken human language. Recent advances are revolutionizing NLP by enabling more complex language models that better grasp context, subtleties, and nuances in human communication, leading to more effective and natural interactions between humans and machines. State-of-the-art examples include advanced chatbots that provide highly personalized customer service experiences, AI-driven sentiment analysis tools for real-time market and consumer insights, and sophisticated language translation services that enable seamless international business communication.

Robotics[10] is a broad field of technology that involves the design, construction, operation, and use of robots, integrating concepts from computer science, engineering, and AI. This field focuses on creating machines capable of performing tasks in various environments, often those that are too dangerous or tedious for humans, by mimicking human actions or by making decisions autonomously based on programmed instructions or AI. Advances across ML, DL, GenAI, computer vision, and NLP are enabling robots to learn from experience, recognize and interpret visual and auditory inputs more accurately, and make complex decisions, leading to more dynamic and autonomous applications. State-of-the-art examples include autonomous warehouse robots using DL for efficient inventory management, robotic surgical assistants enhanced with precise computer vision for medical procedures, and customer service robots equipped with advanced NLP for engaging and helpful interactions in the retail and hospitality sectors.

Each of these higher-order technologies existed long before ChatGPT and has proven use cases in business and industry. The challenge for most leaders today is the breakneck pace of innovation. With all of the context presented here, it may be worth revisiting the AI Initiative Inventory I described in my previous post to make sure your strategy incorporates the full spectrum of potential use cases. Given the pace of change, this should be a regularly updated document!

Let me hear your “natural language”.

What questions, criticisms, or suggestions do you have? Where would you like me to go from here? Please feel free to engage with me and the rest of the community in the comments below.

Postscript.

Artificial Intelligence on Wikipedia

Artificial General Intelligence on Wikipedia

Artificial Neural Network on Wikipedia

Transformer on Wikipedia

Reinforcement Learning on Wikipedia

Computer Vision on Wikipedia

Natural Language Processing on Wikipedia
